As Turkey’s leader in AI and IoT technologies, KoçDigital continues to bring Generative Artificial Intelligence (GenAI) technology to companies, accelerating their digital transformations and growth journeys.

The GenAI wave keeps bringing new concepts to the surface; some fade away quickly while others persist. LLM-Agents have been around for a while and have been reported to succeed in a range of settings. In this post we take a closer look at the concept, cover some use cases and discuss how useful agents really are.

When LLMs first came along, they were widely seen as capable of incredible things. Over time, however, their shortcomings became apparent: hallucinations, lack of access to up-to-date and relevant information, and weak performance on tasks that require multiple steps and different approaches. LLM-Agents emerged as a framework to address these issues. The idea is to have multiple LLMs each take care of a well-defined, small-scale task, and to let them work together to perform a complex one.

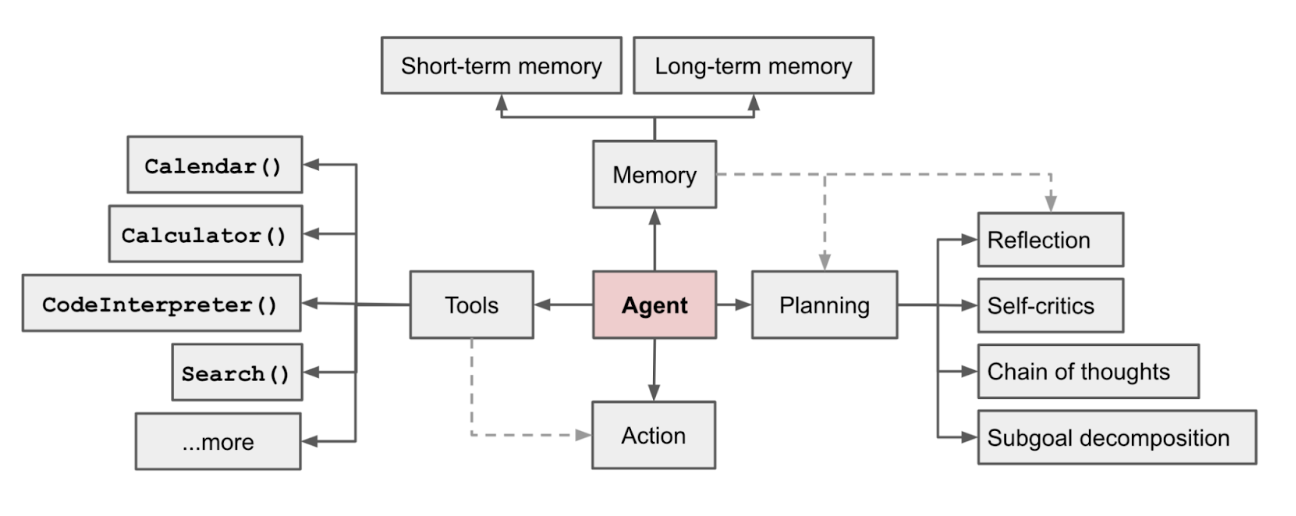

Agent-based reasoning and problem solving have been well studied in the cognitive sciences, and LLM-Agent frameworks borrow from this literature.¹ Each agent is an LLM with a task-specific prompt and access to tools such as a code interpreter, web search or APIs, which it uses to run queries and take actions. The accompanying diagram shows the general structure of an LLM-Agent. “Planning” refers to the part of the prompt that asks the agent to carry out reasoning steps such as reflection and subgoal decomposition. To follow through the multiple steps that problem solving requires, the agent may also need to remember parts of the process; this is the role of “Memory”.
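
To make planning, tools and memory concrete, here is a minimal, framework-free sketch in Python. It is an illustration under stated assumptions, not how any particular framework works: the model is replaced by a hypothetical `scripted_llm` function that always returns the same plan, the two tools (`lookup`, `multiply`) and their data are made up, and the `tool_name: argument` plan format is a convention invented for this example. A real agent would call an actual LLM and real tools.

```python
import math
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools. In a real agent these would wrap a code interpreter,
# a web search or a company API; here they are tiny offline stand-ins.
def lookup(key: str) -> str:
    facts = {"unit_price": "40", "quantity": "3"}  # made-up demo data
    return facts.get(key, "unknown")

def multiply(arg: str) -> str:
    # Multiply a comma-separated list of integers, e.g. "40, 3".
    return str(math.prod(int(x) for x in arg.split(",")))

TOOLS: dict[str, Callable[[str], str]] = {"lookup": lookup, "multiply": multiply}

def scripted_llm(prompt: str) -> str:
    # Placeholder for a real model call: always returns the same plan.
    if prompt.startswith("PLAN:"):
        return "lookup: unit_price\nlookup: quantity\nmultiply: {0}, {1}"
    raise ValueError(f"unexpected prompt: {prompt!r}")

@dataclass
class Agent:
    llm: Callable[[str], str]                         # prompt -> completion
    tools: dict[str, Callable[[str], str]]            # tool name -> tool function
    memory: list[str] = field(default_factory=list)   # results of earlier steps

    def plan(self, goal: str) -> list[str]:
        # Planning: ask the LLM to decompose the goal into one subgoal per line,
        # each written as "tool_name: argument" (a convention assumed here).
        reply = self.llm(f"PLAN: {goal}")
        return [line.strip() for line in reply.splitlines() if line.strip()]

    def run(self, goal: str) -> str:
        for step in self.plan(goal):
            tool_name, _, arg = step.partition(":")
            # Memory: {0}, {1}, ... inside an argument refer to earlier step results.
            arg = arg.strip().format(*self.memory)
            result = self.tools[tool_name.strip()](arg)
            self.memory.append(result)
        return self.memory[-1]

agent = Agent(llm=scripted_llm, tools=TOOLS)
print(agent.run("What is the total value of the order?"))  # prints 120
```

The final step only works because the agent remembers the two lookups; without the `memory` list, the `multiply` call would have no arguments to work with.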

Depending on the use case, we can use a single LLM-Agent with access to all the tools and a complex set of prompts, or multiple LLM-Agents with different capabilities and goals. LangChain, LlamaIndex, Microsoft AutoGen, CrewAI and various other frameworks provide the infrastructure to implement LLM-Agents.
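
The multi-agent variant can be sketched as two specialised agents, a writer and a reviewer, that share one model and differ only in their system prompts. They pass messages back and forth until the reviewer approves. This is a plain-Python illustration of the idea, not the API of LangChain, AutoGen or CrewAI; `RoleAgent`, `write_with_review`, the `fake_model` stand-in and the "APPROVE" convention are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# (system_prompt, message) -> reply. In practice this would wrap a real LLM client;
# the signature is an assumption of this sketch, not any framework's API.
ModelFn = Callable[[str, str], str]

@dataclass
class RoleAgent:
    name: str
    system_prompt: str  # narrow, role-specific instructions
    model: ModelFn

    def __call__(self, message: str) -> str:
        return self.model(self.system_prompt, message)

def write_with_review(writer: RoleAgent, reviewer: RoleAgent, task: str,
                      max_rounds: int = 3) -> tuple[str, int]:
    """Writer drafts, reviewer critiques; stop when the reviewer replies APPROVE.

    Returns the final draft and the number of review rounds used. If the
    rounds run out, the last revision is returned without a further review."""
    draft = writer(task)
    for round_no in range(1, max_rounds + 1):
        verdict = reviewer(draft)
        if verdict.strip().upper().startswith("APPROVE"):
            return draft, round_no
        # Feed the reviewer's critique back into the writer's next message.
        draft = writer(f"{task}\nPrevious draft: {draft}\nReviewer feedback: {verdict}")
    return draft, max_rounds

def fake_model(system_prompt: str, message: str) -> str:
    # Deterministic stand-in for an LLM so the example runs offline.
    if system_prompt.startswith("You write"):
        return "Revenue grew 12%." if "feedback" in message else "Revenue grew."
    if system_prompt.startswith("You review"):
        return "APPROVE" if any(ch.isdigit() for ch in message) else "Include the growth figure."
    raise ValueError(f"unknown role prompt: {system_prompt!r}")

writer = RoleAgent("writer", "You write one-sentence summaries.", fake_model)
reviewer = RoleAgent("reviewer", "You review summaries; reply APPROVE or give feedback.", fake_model)
print(write_with_review(writer, reviewer, "Summarize the Q3 report."))  # prints ('Revenue grew 12%.', 2)
```

Each agent keeps a short, focused prompt, and the collaboration logic lives in ordinary code rather than in one large prompt.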

Andrew Ng has been a strong proponent of agentic workflows and has suggested various approaches to building them.² The most striking use case so far seems to be coding assistants, where LLM-Agents lead to considerable improvements.³ In coding, execution and testing can be automated, which gives the agent concrete feedback and allows it to achieve good results. In other domains we have yet to see a comparable breakthrough from LLM-Agents, although they do provide incremental gains.
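
Why coding is such a good fit can be shown with a generate, test and revise loop: the feedback comes from actually running the code rather than from another model's opinion. In the sketch below, `fake_code_model` is a hypothetical stand-in for a code-generating LLM that first returns an off-by-one bug and fixes it once it sees the failing tests; `run_tests`, `generate_until_green` and the prompt wording are likewise assumptions made for illustration.

```python
from typing import Callable

# prompt -> Python source code. Stands in for a code-generating LLM (an assumption).
CodeModel = Callable[[str], str]
Case = tuple[tuple, object]  # (positional arguments, expected return value)

def run_tests(source: str, tests: list[Case], func_name: str) -> list[str]:
    """Execute candidate source and return failure messages (empty list if all pass)."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # untrusted code: isolate this in a real system
        func = namespace[func_name]
    except Exception as exc:
        return [f"could not load {func_name}: {exc!r}"]
    failures = []
    for args, expected in tests:
        try:
            got = func(*args)
        except Exception as exc:
            failures.append(f"{func_name}{args} raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"{func_name}{args} returned {got!r}, expected {expected!r}")
    return failures

def generate_until_green(model: CodeModel, spec: str, tests: list[Case],
                         func_name: str, max_attempts: int = 3) -> tuple[str, int]:
    """Generate code, run the tests, and feed failures back until everything passes."""
    prompt = spec
    for attempt in range(1, max_attempts + 1):
        source = model(prompt)
        failures = run_tests(source, tests, func_name)
        if not failures:
            return source, attempt
        # Concrete test failures become part of the next prompt.
        prompt = spec + "\nYour previous code failed these tests:\n" + "\n".join(failures)
    raise RuntimeError(f"no passing solution after {max_attempts} attempts")

def fake_code_model(prompt: str) -> str:
    # Scripted stand-in: the first answer has an off-by-one bug, the retry fixes it.
    if "failed these tests" in prompt:
        return "def total(n):\n    return sum(range(1, n + 1))\n"
    return "def total(n):\n    return sum(range(1, n))\n"

tests = [((3,), 6), ((1,), 1)]
source, attempts = generate_until_green(
    fake_code_model, "Write total(n) that returns 1 + 2 + ... + n.", tests, "total")
print(attempts)  # prints 2
```

Because `exec` runs whatever the model produces, a real coding agent would execute candidates in an isolated sandbox rather than in its own process.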

In practical applications, we encounter complex sets of business constraints and goals. Although these can usually be handled through prompting alone, doing so makes projects difficult to maintain and makes transferring expertise to new projects cumbersome. Our experience at KoçDigital has led us to use LLM-Agent frameworks even when a particular project does not require their full power: structuring the work as LLM-Agents gives us a more streamlined approach that is easier to maintain and to re-implement.

Another opportunity presented by LLM-Agents is the ability to innovate with LLMs without training the LLM itself. Innovating at the model level is prohibitively expensive: training an LLM requires a great deal of compute, data and expertise. LLM-Agents, on the other hand, can leverage expertise in a particular domain to obtain strong results.

1. https://arxiv.org/pdf/2309.02427v3

2. https://www.deeplearning.ai/the-batch/tag/letters/

3. https://www.youtube.com/watch?v=aA-HVPmjt3c