Why AI Ethics?

In the age of Big Data, society is being shaped by the analysis and application of data. Data Science and Artificial Intelligence have already changed our world. It is therefore crucial to evaluate how these applications affect societal values. Their drawbacks and benefits should be assessed in terms of framework, impact, and methodology. These applications are already part of our daily lives, spanning manufacturing, retail, finance, health care, human resources and recruiting, customer service, agriculture, and education.

Due to these concerns, a field of study known as ethical artificial intelligence has emerged. Among the characteristics most widely expected of ethical artificial intelligence applications are:

- Privacy and Security: Protection of privacy and the security of crucial personal and business information are becoming more challenging as AI systems become more prevalent. This principle is concerned with informing the users about how and why their data will be used and stored.

- Transparency: AI systems make decisions that help businesses tremendously, but it must be possible to explain the components of the AI system and the intended use of its outputs when judgments are made.

- Robustness and Safety: It is essential that AI systems function consistently and safely under both expected and unforeseen circumstances. AI system design should include testing before deployment and maintenance after deployment. Both are important to ensure that AI systems behave reliably under a wide range of conditions.

- Diversity and Fairness: The AI system should prioritize diversity, participation, and inclusion, encourage all voices to be heard, treat all individuals equally, and safeguard social equity. When AI systems assist with medical care, loan applications, or employment, for example, they should make the same suggestions to everyone who has identical symptoms, financial circumstances, or professional skills.

- Accountability: AI system designers and implementers are responsible for how their systems function. Developing norms such as the protection of data and the identification and mitigation of potential risks is part of an organization's responsibility for accountability.
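
The Diversity and Fairness principle above asks that people with identical relevant circumstances receive the same suggestion. A minimal sketch of how such a consistency check might look is given below. The function name `find_inconsistent_groups`, the applicant fields, and both toy decision rules are hypothetical and chosen only for illustration; they do not describe any particular system or guideline.

```python
from collections import defaultdict


def find_inconsistent_groups(records, relevant_keys, decide):
    """Return profiles whose members receive more than one distinct decision.

    records: list of dicts describing individuals (hypothetical data).
    relevant_keys: attributes that are allowed to influence the decision.
    decide: function mapping one record to a decision label.
    """
    decisions_by_profile = defaultdict(set)
    for record in records:
        # Individuals with the same relevant attributes share a profile.
        profile = tuple(record[key] for key in relevant_keys)
        decisions_by_profile[profile].add(decide(record))
    # A profile with several distinct decisions violates the consistency rule.
    return {p: d for p, d in decisions_by_profile.items() if len(d) > 1}


# Hypothetical loan applicants; "gender" must not affect the outcome.
applicants = [
    {"income": 50000, "debt": 10000, "gender": "F"},
    {"income": 50000, "debt": 10000, "gender": "M"},
    {"income": 30000, "debt": 20000, "gender": "F"},
]


def biased_model(applicant):
    # Toy rule that wrongly depends on a protected attribute.
    if applicant["income"] > 40000 and applicant["gender"] == "M":
        return "approve"
    return "reject"


def fair_model(applicant):
    # Toy rule that uses only financial circumstances.
    if applicant["income"] - applicant["debt"] > 30000:
        return "approve"
    return "reject"


biased_result = find_inconsistent_groups(applicants, ["income", "debt"], biased_model)
fair_result = find_inconsistent_groups(applicants, ["income", "debt"], fair_model)
print(biased_result)
print(fair_result)
```

A check of this kind only covers exact matches on the chosen attributes. In practice, deciding which attributes count as relevant is itself an ethical judgment that the code cannot make.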

What has been done?

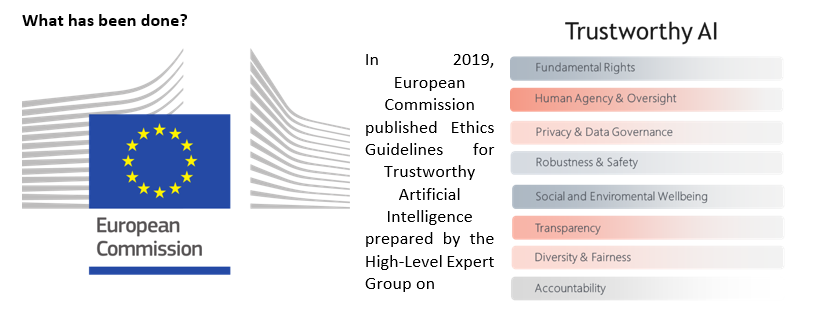

The High-Level Expert Group on Artificial Intelligence (AI HLEG) and the OECD have both addressed this question. The OECD's 36 member countries, along with Argentina, Brazil, Colombia, Costa Rica, Peru and Romania, signed up to the OECD Principles on Artificial Intelligence, which have the backing of the European Commission. A trustworthy approach is key to enabling "responsible competitiveness", by providing the foundation upon which all those using or affected by AI systems can trust that their design, development and use are lawful, ethical and robust. While the OECD Principles on Artificial Intelligence are not yet legally binding, existing OECD Principles in other policy areas have proved highly influential in setting international standards and helping governments to design national legislation.

What about companies?

Ethics in Artificial Intelligence is not just an academic curiosity; it is a necessity for businesses. Companies that fail to operationalize data and AI ethics are doomed to fail in their technologies. To overcome this, companies need ethical standards, identification of ethical risks, and mitigation plans throughout the organization. Without such organizational practices, companies lose the trust of consumers, clients and employees. According to the Capgemini Research Institute's Ethics in AI executive survey conducted in 2020, customers want AI systems to be more transparent and explainable (71%), and 67% expect AI-developing companies to take responsibility for any negative effects their systems may have. They are also concerned about the ethical implications, with 66% expecting AI models to be fair and unbiased. They are looking for trustworthy AI.

What have we accomplished as KoçDigital thus far?

In 2021, KoçDigital established its own principles based on the European Commission's Ethics Guidelines for Trustworthy Artificial Intelligence. To evaluate how well our principles can be applied to AI projects, we prepared The Artificial Intelligence Ethical Risk Assessment Guide for Businesses in 2022 after a thorough analysis of AI impact assessment approaches. The key goals of this guide are to identify the risk and impact profile of a project and to take proactive steps to reduce risk. As a complementary step, we developed the Trustworthy Artificial Intelligence Organizational Management Process, which aims to promote organizational communication and monitor risk throughout the project's life cycle.

KoçDigital Code of Ethics for Artificial Intelligence

Dr. Ayşegül Turupcu
Öykü Zeynep Bayramoğlu
Computer Vision and Manufacturing Analytics Unit
Data and Analytics Directorate
KoçDigital